29 September 2004

Bloglines API may provide scalability for RSS

This posting discusses the issue of RSS scalability (popular RSS sites can get hammered by requests, making RSS hard to scale) and describes Bloglines' new API, which allows programmers to use Bloglines as a cache for popular feeds: O'Reilly Network: The New Bloglines Web Services
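To make the caching idea concrete, here is a minimal Python sketch of a shared feed cache. It is not the Bloglines API, whose calls are not described here. However many readers ask for a popular feed, the origin server is hit at most once per time-to-live window. The `FeedCache` class, the `fetch` callback and the 30-minute TTL are illustrative assumptions. A real aggregator would also use HTTP conditional requests (ETag / If-Modified-Since).

```python
import time
from typing import Callable, Dict, Tuple


class FeedCache:
    """Hypothetical shared cache: at most one upstream fetch per feed per TTL window."""

    def __init__(self, fetch: Callable[[str], str], ttl_seconds: float = 1800.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch          # function that actually downloads the feed
        self._ttl = ttl_seconds      # assumed freshness window (30 minutes)
        self._clock = clock          # injectable so the example can fake time
        self._entries: Dict[str, Tuple[float, str]] = {}
        self.upstream_requests = 0   # how often the origin server was hit

    def get(self, url: str) -> str:
        now = self._clock()
        cached = self._entries.get(url)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]         # fresh copy: serve without touching the origin
        body = self._fetch(url)      # stale or missing: fetch once and store
        self.upstream_requests += 1
        self._entries[url] = (now, body)
        return body


# Usage: 1000 readers of the same feed within one TTL window
fake_now = [0.0]
cache = FeedCache(fetch=lambda url: "<rss version='2.0'/>",
                  clock=lambda: fake_now[0])
for _ in range(1000):
    cache.get("http://example.org/popular.xml")
print(cache.upstream_requests)  # 1
```

The design choice is the one a central aggregator offers: the popular feed's publisher serves one request per window instead of one per subscriber.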

Useful resources for explaining RSS

Here's a nice post, Explaining RSS. It includes links to a video and to a page of resource links.

UN ICT Task Force publishes "Internet Governance: A Grand Collaboration"

This CircleID posting, Fifth Publication of the UN ICT Task Force Series, links to the UN ICT Task Force publication Internet Governance: A Grand Collaboration (this page has a link to the PDF itself). This is good source material for grounded debate on issues such as the digital divide and, more generally, the use of the Internet throughout the world.

Internet Search Volume by Type of User

John Battelle's blog entry John Battelle's Searchblog: Search Volume by Type of User previews some slides from Gian Fulgoni, founder of Comscore, analysing how people use Internet search engines. Very interesting: "the heaviest users of search, who are a minority of total search users, account for the vast majority of search queries. This seems a case where the tail is not as powerful as the head....And clearly, the more folks use the web, the more they use search. What happens when the majority of us are heavy users of search? Time to buy more servers...."

28 September 2004

EU Promotes OpenOffice standards

The EU is promoting OpenOffice file formats as standards, as documented in this interesting blog entry: ongoing · Smart EC

This could lead to these standards being ratified by the ISO, which is a good thing, in my opinion. It would be preferable to have a vendor-neutral open standard for document exchange, rather than relying on de facto standards built on proprietary formats.

23 September 2004

Web 2.0 Conference (O'Reilly)

This looks like a good conference: John Battelle's Searchblog: Web 2.0 Draws Near

21 September 2004

CircleID's Channels

The list of CircleID's channels maps out the key issues facing the Internet today. The articles in these channels can be accessed via the web portal or via RSS feeds.

CircleID Channels

As a crucial part of the Internet's core functionality, the global directory structure, better known as the Domain Name System, has been a subject filled with technical and political challenges that have grown (and continue to grow) into numerous specific branches. CircleID channels identify key Internet-related 'tension points' that members are invited to discuss, write about, comment on, analyze, offer solutions for, criticize, and gain value from.

Internet Governance

Since the creation of the Internet Corporation for Assigned Names and Numbers (ICANN), the regulation of the Internet has become a heated political theme. Internet users have criticized every aspect of the governing bodies, from the reasons for the existence of such institutions to the nature of the policies put in place and the methods used to achieve them. Others defend the existence of centralized governing organizations and the involvement of government. How do we reach consensus in a borderless geography and open frontier?

Empowering DNS

The Domain Name System (DNS) is a global directory designed to map names to Internet Protocol addresses and vice versa. DNS contains billions of records, answers billions of queries, and accepts millions of updates from millions of users on an average day. The security and robustness of DNS determine the stability of the Internet, and its continuous enhancement will remain a critical factor.

Top-Level Domains

Top-Level Domains (TLDs) divide the Internet namespace into sectors and geographical regions. The management and use of existing TLDs, as well as the introduction of new generic Top-Level Domains, are matters that are still evolving and in need of good planning and sound decisions.

Registrars

Much like other Internet ventures, the business of buying and selling domain names has passed the so-called gold rush and bubble period and is now moving towards maturity and viability. The initially simple domain name registration process, once handled by one organization, is now a global industry with fierce competition and intense price wars. It has over 100 accredited registrars and thousands upon thousands of resellers, faces controversies over a growing second-hand market, and is gaining many other specialty services. The naming business is indeed significant business!

Legal Issues

Today, domain name disputes are daily occurrences that involve domain name theft, the Uniform Domain Name Dispute Resolution Policy (UDRP), cybersquatting, typosquatting, trademark protection, and more. These issues have caused regional and international concern. Others have challenged the very laws that dictate the decisions made in courts. Is it time to call for further legal enforcement, or time to question the law itself?

Addressing Spam

While spam continues to be a major issue for all individuals and organizations on the Internet, increasing effort is being made to fight this problem from every possible angle, technically and legally. Some, however, argue that the best way to fight spam is through the Internet's very core infrastructure. In other words, we need to take a closer look at the current state of Internet Protocol addressing and the Domain Name System.

Privacy Matters

When it comes to the Internet, privacy tends to be an ambiguous concept on which it has been difficult to reach consensus. Issues related to the collection, maintenance, use, disclosure, accuracy and processing of private information are surrounded by numerous debates. What is the true cost of privacy to individuals, society and the business world? Regardless of what is decided today and tomorrow, one thing is certain: privacy matters!

IP Address & Beyond

Even the best Internet visionaries in the early 1980s could not imagine the dilemma of scale that the Internet would come to face. With realistic projections of many millions of interconnected networks in the not-too-distant future, the Internet faces a choice between limiting its rate of growth and ultimate size, or disrupting the network by changing to new techniques or technologies. Whether or not it is still too early, the shift has begun from the current Internet Protocol (IPv4) to IPv6, the next-generation Internet Protocol. So where are we today with IPv4? What does tomorrow hold with IPv6? And how do we deal with the challenges on the road in between?

ENUM Convergence

ENUM (Electronic Number Mapping) is the first and most notable convergence protocol developed to make telephone numbers recognizable by the Domain Name System (DNS). It offers the potential for people to be contacted through multiple media channels such as email, fax and mobile phones. While many see ENUM convergence as significant progress, others have raised questions about the types of services to be offered and about privacy and security. So what are the future implications? Welcome to the technological, commercial, and regulatory world of ENUM convergence.

Internationalized DN

Today, many efforts are underway in the Internet community to make domain names available in character sets other than ASCII. As the Internet has spread to non-English-speaking people around the globe, it has become increasingly apparent that forcing the world to use domain names written only in a subset of the Latin alphabet is not ideal. Internationalized Domain Names (IDN) are considered a global trend and are likely to be adopted by a large number of websites.

Exploring Frontlines

To date, millions of domain names have been registered around the world. A high percentage of registered domain names remain unused, and many others serve to protect corporate trademarks. There is also a growing number of domain names being used in creative ways that leverage the true power of the Internet. What is really going on at the front lines of cyberspace?
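Several of the channels above come down to how names are mapped in DNS. As a rough illustration, here is a Python sketch using only the standard library. The function names and the example telephone number are made up. The sketch builds three kinds of name:

- the reverse-lookup name for an IP address;
- the ENUM domain for an E.164 telephone number (reverse the digits, separate them with dots, append e164.arpa);
- the ASCII-compatible form of an internationalized domain name, via Python's built-in `idna` codec, which implements IDNA 2003.

It only constructs names. Actually querying them (e.g. for ENUM's NAPTR records) would need a DNS resolver library.

```python
import ipaddress
import re


def reverse_dns_name(addr: str) -> str:
    """Name queried for an address-to-name (PTR) lookup."""
    ip = ipaddress.ip_address(addr)
    if ip.version == 4:
        # IPv4: reverse the four octets, append in-addr.arpa
        return ".".join(reversed(str(ip).split("."))) + ".in-addr.arpa"
    # IPv6: reverse all 32 hex nibbles of the expanded address, append ip6.arpa
    return ".".join(reversed(ip.exploded.replace(":", ""))) + ".ip6.arpa"


def enum_domain(e164_number: str) -> str:
    """ENUM: map an E.164 telephone number to a domain under e164.arpa."""
    digits = re.sub(r"\D", "", e164_number)  # drop '+', spaces and dashes
    if not digits:
        raise ValueError("no digits in telephone number")
    return ".".join(reversed(digits)) + ".e164.arpa"


def idn_to_ascii(domain: str) -> str:
    """IDN: Unicode domain -> ASCII-compatible 'xn--' form (IDNA 2003 codec)."""
    return domain.encode("idna").decode("ascii")


print(reverse_dns_name("128.141.201.74"))  # 74.201.141.128.in-addr.arpa
print(enum_domain("+353 1 234 5678"))      # 8.7.6.5.4.3.2.1.3.5.3.e164.arpa
print(idn_to_ascii("bücher.example"))      # xn--bcher-kva.example
```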

Can TCP/IP Survive?

This is an article coming out of the 'Internet Mark 2 Project': Can TCP/IP Survive?

The following article is an excerpt from the recently released Internet Analysis Report 2004 - Protocols and Governance. Full details of the argument for protocol reform can be found at the 'Internet Mark 2 Project' website, where a copy of the Executive Summary can be downloaded free of charge. The report also comments on the response of governance bodies to these issues...

Assessment

TCP, if not TCP/IP, needs to be replaced, probably within a five- to ten-year time frame. The major issue to overcome is migration (see below).

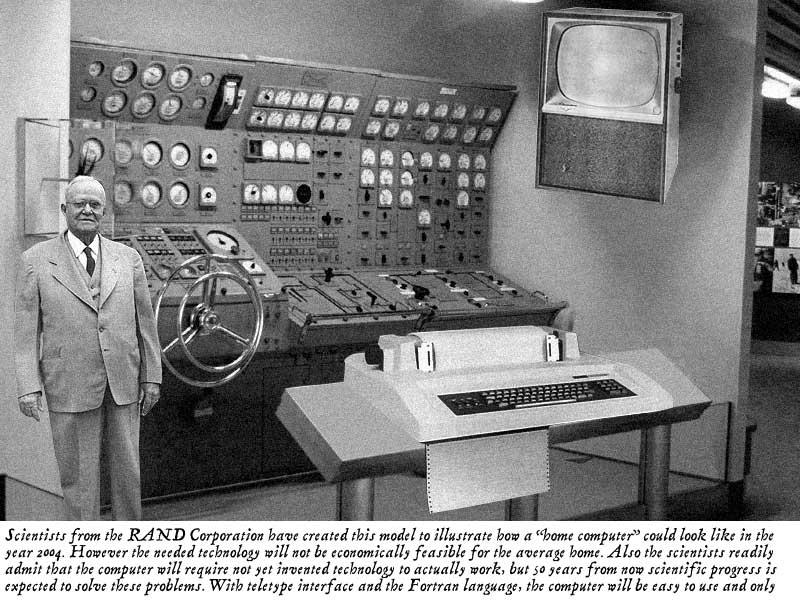

1954's Home of the Future

In 1954 RAND predicted that this would be the Home Computer of 2004, and would be easy to use and programmed in Fortran! Ummm.

Thanks to: Metafilter.

UPDATE 2004-09-29: Okay, it looks like this was a hoax; still pretty funny, though. RAND Hoax: "The picture is actually an entry submitted to an image modification competition, taken from an original photo of a submarine maneuvering room console found on U.S. Navy web site, converted to grayscale, and modified to replace a modern display panel and TV screen with pictures of a decades-old teletype/printer and television (as well as to add the gray-suited man to the left-hand side of the photo)"

Tim Berners-Lee (Interviews on Internet.com)

I've been reading John Battelle's Searchblog for about a month now and it's definitely staying on my blogroll. This recent posting alerted me to an interesting interview: John Battelle's Searchblog: Tim Berners Lee Interviewed

It is now nearly 15 years since Tim and his colleagues at CERN developed the initial prototypes of what we now know as the Web. I remember well the first time I heard of it, at the Network Services Conference in Pisa, Italy, in October 1992 (the call for participation is still online: NSC92). The conference was for the European academic networking community and included a nice mixture of librarians and IT folks. I was blown away by two talks: John S. Quarterman's talk on Internet growth (which was pretty impressive even before the Web), and Jean-Francois Groff's talk, "World-Wide Web: global hypertext coming true", which was written by Tim, Jean-Francois and another of their CERN colleagues, Robert Cailliau. Jean-Francois described the clients that were available or planned: dumb terminal, PC, Mac, X-Windows and NeXT Cube. We could try out a text-based client by telnetting to info.cern.ch (128.141.201.74)! Not long after this I got into Linux (SLS and then Slackware) and deployed a CERN httpd server at University College Galway, Ireland, where I worked at the time. Later I deployed the official UCG web server.

As one would expect, the interview with Tim focuses on the emerging Semantic Web, his vision of where the Web is going. Interestingly, he is also very excited about the mobile Web, the area where I now work with colleagues in the Telecommunications Software & Systems Group.

I still believe that good research in communications software requires active collaboration and co-operation between different academic disciplines. For the Web it was librarians/archivists and computer scientists. Now it is these plus telecommunications engineers and many other disciplines. The challenge is to learn from our experiences in all these domains and aggregate knowledge to produce really useful systems based on simple principles. It can be done: just look at the Web!

For more information, see the W3C: A Little History of the World Wide Web and CERN: What are CERN's greatest achievements?.

mLearning (Mobile Learning) in Korea

Well, I had predicted that it would happen: mobile devices being used to enhance the learning environment, in this case with the introduction of a Personal Multimedia Player (PMP). As usual these days in the mobile world, I suppose, the trend is being led by Korea: Smart Mobs: Mobile learning links to a Korea Times report stating that students can "use personal gadgets to study instead of textbooks on the bus or subway." I wonder if they're IPv6 devices; from the report, they sound more like MP3 players with video that are not always online. The same device seems to be marketed as the iRiver PMP-100 outside Korea (that's the picture).

EMusic: Lesser known music distribution

A site called EMusic tries to be an independent distribution channel for music: The New York Times > Technology > A Music Download Site for Artists Less Known

17 September 2004

RSSify a directory

Andrew Grumet has written a tool that turns a directory into an RSS feed so that subscribers can see and download new files as they appear: Andrew Grumet's Weblog: Version 0.3 of dir2rss
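The idea behind dir2rss is simple enough to sketch. The following Python is not Andrew's code, because his tool's internals are not described here. It is an assumed minimal version that lists the newest files in a directory as RSS 2.0 items. Each item gets an enclosure so that clients can download the file itself. The base URL, the item limit and the generic MIME type are placeholders.

```python
import os
import xml.etree.ElementTree as ET
from email.utils import formatdate
from urllib.parse import quote


def dir_to_rss(directory: str, base_url: str, title: str, max_items: int = 20) -> str:
    """Build an RSS 2.0 document listing the newest files in a directory."""
    with os.scandir(directory) as it:
        files = [e for e in it if e.is_file()]
    files.sort(key=lambda e: e.stat().st_mtime, reverse=True)  # newest first

    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = title
    ET.SubElement(channel, "link").text = base_url
    ET.SubElement(channel, "description").text = f"New files in {title}"

    for entry in files[:max_items]:
        st = entry.stat()
        url = base_url.rstrip("/") + "/" + quote(entry.name)
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = entry.name
        ET.SubElement(item, "link").text = url
        ET.SubElement(item, "guid").text = url
        # RFC 822-style date, as RSS 2.0 expects for pubDate
        ET.SubElement(item, "pubDate").text = formatdate(st.st_mtime, usegmt=True)
        # the enclosure lets clients fetch the file itself (placeholder MIME type)
        ET.SubElement(item, "enclosure", url=url, length=str(st.st_size),
                      type="application/octet-stream")
    return ET.tostring(rss, encoding="unicode")
```

Regenerating this output whenever the directory changes (say, from a scheduled job) and serving it at a fixed URL would give subscribers the "see new files as they appear" behaviour.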

16 September 2004

Bellheads and Netheads

A recent Jon Udell article alerted me for the first time to the terms "Bellheads" and "Netheads" to refer, respectively, to telecoms people and computer network people. Jon refers back to an old posting of his own that quotes this report: Netheads versus Bellheads

Research into Emerging Policy Issues in the Development and Deployment of Internet Protocols, Final Report for the Federal Department of Industry (Contract Number U4525-9-0038), T.M. Denton Consultants, Ottawa, with François Ménard and David Isenberg. This report is no longer online (but an archived PDF is available on archive.org). The terms live on in this set of slides/pages: Netheads versus Bellheads.

The research centre I work in, the TSSG, is all about the convergence of these technologies, particularly in the mobile Internet (2.5G, 3G). We try to have a good mix of Bellheads and Netheads!

Nokia and NEC test IMS (mobile IP)

Two industry heavyweights have completed interoperability tests of a new technology designed to drive the convergence of voice, data, and video services over wireless and fixed infrastructure based on the Internet Protocol.

Perl for XML parsing

A useful, up-to-date summary: XML.com: Perl Parser Performance by Petr Cimprich, on O'Reilly's http://www.xml.com.

15 September 2004

Perl for .NET

The afternoon started off with a session on Perl for the .NET Environment by Jonathan Stowe. I assume Jonathan will update his computing links page with details of this talk in time :-)

Notable in the audience was a strong presence of ActiveState dudes. ActiveState is well known for its excellent support of Perl (and other dynamic languages like Python and Tcl) on Windows platforms; it sells excellent tools and also gives software away as open source. I'm sure this topic is key for them.

There was a brief discussion of reuse, and the obvious fact that the Comprehensive Perl Archive Network (CPAN) is the world's largest open library of reusable code was mentioned. The stats today are: online since 1995-10-26; 2507 MB; 260 mirrors; 3863 authors; 6893 modules.

Jonathan presented an overview of .NET and discussed why interoperability is a priority for the Perl community (and indeed for all software). I think the audience was receptive; after all, Perl has always defined itself as a glue language, linking other software components in useful ways to improve functionality.

He structured his talk around three ways to interoperate:

- Exchanging XML Documents (datasets, xml serialisation, configuration)

- HTTP Remoting (serialisable objects, marshalbyref objects)

- Web Services

One of the easiest ways for Perl to interoperate is to have Perl serialise an object into an XML structure (using XML::Simple) and use this to exchange data with .NET (or indeed with any web service). However, there are limitations to this approach.
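XML::Simple itself is a Perl module. As a language-neutral illustration of both the approach and one of its limitations, here is a rough Python equivalent using the standard library's ElementTree. The `to_xml`/`from_xml` helpers and the `order` record are invented for this example. A flat record is written as one child element per field and read back on the other side. The XML carries no type information, though, so numbers and booleans come back as strings unless both ends agree on a schema.

```python
import xml.etree.ElementTree as ET


def to_xml(tag: str, data: dict) -> str:
    """Serialise a flat dict into an XML element, one child element per key."""
    root = ET.Element(tag)
    for key, value in data.items():
        ET.SubElement(root, key).text = str(value)
    return ET.tostring(root, encoding="unicode")


def from_xml(text: str) -> dict:
    """Parse the XML back into a dict; every value comes back as a string."""
    return {child.tag: child.text for child in ET.fromstring(text)}


order = {"id": 42, "customer": "Acme", "paid": True}
wire = to_xml("order", order)
print(wire)            # <order><id>42</id><customer>Acme</customer><paid>True</paid></order>
print(from_xml(wire))  # {'id': '42', 'customer': 'Acme', 'paid': 'True'}
```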

Jonathan did mention a common problem: people assume that they can call remote constructors, and then make assumptions about what they can access remotely, whereas all of this actually depends on the implementation of that remote "object". Personally, I like to think of web services not as being like CORBA (remote object references) but as essentially a message-passing mechanism, with no assumption that you are always communicating with the same remote object. If you never think of it as communicating with a remote object, you are less likely to make these conceptual errors.

YAPC::Europe (Belfast)

I am attending my first Perl conference: YAPC::Europe in Belfast (YAPC means Yet Another Perl Conference). This is quite fun for me, as I have had a long interest in Perl (both for UNIX/Linux system administration and for web programming) but haven't had much time to spend on it recently. I use lots of Perl (like the MovableType scripts that power this weblog) but do not write much code at the moment.

The keynote address was by Allison Randal of The Perl Foundation and was a good update on Perl (Perl5 and Perl6 release news), Parrot (the new virtual machine for dynamic languages like Perl) and Ponie (bridging Perl5 and Perl6).

There was an excellent talk on HTTP::Proxy by Philippe 'Book' Bruhat. It looks to me like this could be deployed as an IPv4 or an IPv6 proxy (this dependency is on LWP rather than on Philippe's module). He uses it on his own machine to do the usual advertisement stripping, but it can also change character sets and customise the appearance of any external website. This means one can view all web-based material according to one's personal preferences. His examples focused on the Dragon Go Server (i.e. on-line playing of the Japanese/Chinese game of Go), which he customised to suppress the text message box (as it pushed the submit button too far down the page) unless he explicitly requested it by clicking on the remaining link. It can also be used to log personal browsing habits for potential data mining later. The system is based on small filter scripts (mainly using regular expression matches and substitutions) that look easy enough to master. This is a very flexible tool for web-based material, or as he says himself, "All of your web pages belong to us!"
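HTTP::Proxy filters are written in Perl against that module's own API, which is not reproduced here. The Python sketch below only illustrates the underlying idea Philippe described: a page body passes through a chain of small regular-expression filters before it reaches the browser. Both filters, their patterns and the `show_message` link are hypothetical. Regexes like these are also fragile on arbitrary real-world HTML.

```python
import re
from typing import Callable, List

Filter = Callable[[str], str]


def strip_ads(html: str) -> str:
    # hypothetical rule: drop any <div class="ad">...</div> block
    return re.sub(r'<div class="ad">.*?</div>', "", html, flags=re.S)


def hide_textarea(html: str) -> str:
    # replace the message box with a link that asks for it explicitly
    return re.sub(r"<textarea\b.*?</textarea>",
                  '<a href="?show_message=1">add a message</a>', html, flags=re.S)


def apply_filters(html: str, filters: List[Filter]) -> str:
    """Run the page body through each filter in order, as a proxy would."""
    for f in filters:
        html = f(html)
    return html


page = ('<div class="ad">Buy!</div>'
        '<form><textarea name="msg"></textarea><input type="submit"></form>')
print(apply_filters(page, [strip_ads, hide_textarea]))
# <form><a href="?show_message=1">add a message</a><input type="submit"></form>
```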

14 September 2004

Linux standards base Version 2.0 has C++ support

It is good to see development of the LSB to avoid fragmentation of the Linux development platform: InfoWorld: Linux standards base Version 2.0 has C++ support (September 13, 2004, by Ed Scannell). This could help make Linux a viable target platform for developers, and ensure that, by following a single standard, deployment is possible on all compliant Linux distributions (most have signed up).

12 September 2004

Valid XHTML/HTML

I just re-edited my homepage, TSSG WIT: Mícheál Ó Foghlú's Home Page, to make it valid HTML 4.01 Transitional. Not too much trouble. However, making this weblog itself (i.e. the index pages and entry pages) valid XHTML 1.0 Transitional is much trickier, as there are often "&" symbols buried in URLs that I just pasted in when I posted a link (and the odd one I typed myself inadvertently). I've left it on the long finger for now.
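The usual fix for stray "&" characters is to escape URLs when they are written into the page, so that `&` becomes `&amp;` inside the `href` attribute. Here is a minimal Python sketch using the standard library's `html.escape`. The `link` helper and the example URL are illustrative.

```python
from html import escape


def link(url: str, text: str) -> str:
    """Build an <a> element whose href is valid in (X)HTML: & -> &amp;, quotes escaped."""
    return f'<a href="{escape(url, quote=True)}">{escape(text)}</a>'


print(link("http://example.org/search?q=perl&lang=en", "Search & find"))
# <a href="http://example.org/search?q=perl&amp;lang=en">Search &amp; find</a>
```

Escape each raw URL exactly once. Running text that already contains `&amp;` through it again would produce `&amp;amp;`.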

6 September 2004

Mod_pubsub

An interesting project on enabling easily deployable Publish & Subscribe mechanisms using client-side JavaScript in a browser, server-side mod_perl on Apache, and HTTP as a transport: mod_pubsub Project FAQ
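At its core, publish & subscribe means that publishers send messages to a named topic, and a broker forwards them to whoever has subscribed to that topic. Publishers and subscribers never need to know about each other. The in-process Python sketch below shows just that routing step. The hypothetical `Broker` class does not model mod_pubsub's actual design (JavaScript clients, a mod_perl server, HTTP transport).

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[str, str], None]


class Broker:
    """Minimal topic-based publish/subscribe broker (in-process, no HTTP)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: str) -> int:
        """Deliver message to every subscriber of topic; return how many got it."""
        handlers = self._subscribers.get(topic, [])  # .get avoids creating empty topics
        for handler in handlers:
            handler(topic, message)
        return len(handlers)


received: List[str] = []
broker = Broker()
broker.subscribe("news", lambda topic, msg: received.append(msg))
broker.publish("news", "hello")
print(received)  # ['hello']
```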

Old Internet Services Predictions

I like this list of Trend Wars from IEEE Concurrency Vol. 8, No. 1, January - March 2000

It clearly shows how wireless access technologies were poised to be the next big thing in 2000. You could say almost exactly the same today, but perhaps be less cagey about 3G, as it has firmed up quite a bit.

The article is based on interviews with a number of experts:

Eric A. Brewer is an assistant professor at the University of California, Berkeley in the Department of Computer Science. He cofounded Inktomi Corporation in 1996, and is currently its chief scientist. He received his BS in computer science from UC Berkeley, and his MS and PhD from MIT. He is a Sloan Fellow, an Okawa Fellow, and a member of Technology Review's TR100, Computer Reseller News' Top 25 Executives, Forbes "E-gang," and the Global Economic Forum's Global Leaders for Tomorrow.

Fred Douglis is the head of the Distributed Systems Research Department at AT&T Labs-Research. He has taught distributed computing at Princeton University and the Vrije Universiteit, Amsterdam. He has published several papers in the area of World Wide Web performance and is responsible for the AT&T Internet Difference Engine, a tool for tracking and viewing changes to resources on the Web. He chaired the 1998 Usenix Technical Conference and 1999 Usenix Symposium on Internetworking Technologies and Systems, and is program cochair of the 2001 Symposium on Applications and the Internet (SAINT). He has a PhD in computer science from the University of California, Berkeley.

Peter Druschel is an assistant professor of computer science at Rice University in Houston, Texas. He received his MS and PhD in computer science from the University of Arizona. His research interests include operating systems, computer networking, distributed systems, and the Internet. He currently heads the ScalaServer project on scalable cluster-based network servers.

Gary Herman is director of the Internet and Mobile Systems Laboratory in Hewlett-Packard Laboratories, Palo Alto, CA, and Bristol, UK. His organization is responsible for HP's research on technologies required for deploying and operating the service delivery infrastructure for the future Internet, including the opportunities created by broadband and wireless connectivity. Prior to joining HP in 1994, he held positions at Bellcore, Bell Laboratories, and the Duke University Medical Center. He received his PhD in electrical engineering from Duke University.

Franklin Reynolds is a senior research engineer at Nokia Networks and works at the Nokia Research Center in Boston. His interests include ad hoc self-organizing distributed systems, operating systems, and communications protocols. Over the years he has been involved in the development of various operating systems ranging from small, real-time kernels to fault-tolerant, distributed systems.

Munindar Singh is an assistant professor in computer science at North Carolina State University. His research interests are in multiagent systems and their applications in e-commerce and personal technologies. Singh received a BTech from the Indian Institute of Technology, Delhi, and a PhD from the University of Texas, Austin. His book, Multiagent Systems, was published by Springer-Verlag in 1994. Singh is the editor-in-chief of IEEE Internet Computing.

The interview is based on the following questions:

Internet Technology Questions

1. In retrospect, what were the decisive turning points for Internet and WWW technology to become ubiquitous and pervasive?

2. What are the next likely disruptive technologies in Internet space that might make new marks in the way we live and work?

3. What are the most important technologies that will determine the future Internet's speed and direction?

4. Where do you see the roles of industry, startups, research labs, universities, "open source" companies, and standard organizations in shaping the future Internet?

5. What will be the major application areas dominating the Web?

6. What is the most controversial and unpredictable technology in the Internet space?

Why not take this prediction test yourself today in September 2004?