3 June 2011

Android: FeedHenry Apps

mofoghlu's FeedHenry Apps

4 total, 4 free (100%), 0 paid (0%), 7MB total size, $0 total price

View this Android app list on AppBrain

26 May 2011

HTML5 Last Call May 2011

The next generation of web standards made significant progress this month as the W3C announced that the draft HTML5 specification has been opened up to a "Last Call" for comments from members of the W3C, and the wider community. This is on target for the schedule the W3C set last year for HTML5, and is based on the assumption that the priority is to get a recommended HTML5 specification as soon as possible.

16 May 2011

Semantic Web: RDFa


Whilst attending the World Wide Web Consortium Advisory Committee meeting in Bilbao (today and tomorrow), I realised that one small part of the semantic web initiative, pushed for many years by the W3C, has started to see wide-scale adoption.

RDFa is now claimed to be embedded in 3.6% of all public URLs (October 2010), and growing fast. Yahoo! led the way with SearchMonkey indexing, Google followed with rich snippets, and Facebook have used it to enable their open graph protocol.

For more information see:

9 May 2011

The China Post: Europe set to put data in the cloud

Europe set to put data in the cloud – Google Apps for Business.

BRUSSELS–The American computer scientist John McCarthy — who coined the phrase “artificial intelligence” — predicted in 1961 that computing power may someday become a public utility, much like electricity or water.

The idea that you could flick a switch for data-crunching was as futuristic at the time as humanoid robots or flying cars.

But 50 years on, a robot has won the U.S. quiz show “Jeopardy,” multiple jet-propelled skycars are under development and McCarthy’s computing vision is slowly taking shape.

The idea of siphoning computing power from afar — a concept called “cloud computing” — has been in active development for much of the past decade, with companies such as Google, IBM and Amazon playing a central role.

Now the European Union is rapidly adopting the idea, hoping that it can streamline businesses, rid the 27-country union of overlapping infrastructure and ultimately save time and money.

But it’s also causing intense headaches for EU regulators, who are troubled by issues of privacy and jurisdiction, including tough questions about who owns information and who bears responsibility for how European laws are applied.

Anyone who uses Gmail, Flickr or other services where the data are not saved on their computers is already taking advantage of cloud computing. Businesses are increasingly switching their entire networks to such Internet-based systems.

While it saves money, it also means that personal data can essentially be stored anywhere in the world and that the ability to reach them depends solely on the cloud provider working properly. It’s a potential privacy and logistical nightmare.

Still, the economic argument is striking: The Centre for Economics and Business Research predicts that Europe’s five largest economies could save 177 billion euros (US$257.1 billion) — roughly the output of Ireland — each year for the next five years if all their businesses were to switch over at the expected rate.

In response to the new business possibilities and in an effort to head off the impending privacy concerns, the EU executive is putting together its first cloud-computing strategy. The target for completion is next year, and there’s a sense of urgency to get ground rules in place.

“Normally I prefer clearly defined concepts,” Neelie Kroes, the EU’s top official responsible for information technology and the digital agenda, said as she announced the unveiling of the EU’s cloud strategy in January.

“But when it comes to cloud computing I have understood that we cannot wait for a universally agreed definition. We have to act.”

Compared to the United States, Europe has been a slow adopter of the new technology.

Last year, western Europe accounted for less than a quarter of the US$68 billion spent globally on cloud-computing services, according to technology research consultancy Gartner. The United States occupied nearly 60 percent of the market.

That leaves plenty of room for the technology to expand in Europe, but it’s the privacy issue that is likely to prove the biggest hurdle to a rapid and successful expansion.

One significant problem is that there’s no way for a user to verify where their data are sitting, whether on a server in São Paulo, Siena, Singapore or Seoul.

This raises a particular problem for EU member states, who under EU law can only send personal data outside EU borders if the receiving country meets “adequate” privacy standards.

It’s also unclear under EU regulations whose privacy laws would apply in any dispute where the end-user, the cloud-provider and the actual data servers are all in different countries.

For that reason, the EU’s 27 member states are first trying to align their privacy laws and close jurisdictional gaps.

“This is a necessary condition for cloud computing to be effective in the near future,” said Daniele Catteddu, a communication security expert working on the EU’s cloud computing strategy.

“The major obstacles are legal barriers, the enormous levels of bureaucracy, the difficulties of being compliant with 27 different sets of rules,” he said.

One Sunday last February, tens of thousands of Gmail users opened their e-mail accounts only to find them completely empty — data stored in the cloud had temporarily disappeared.

“In some rare instances, software bugs can affect several copies of the data. That’s what happened here,” Google’s vice president of engineering explained on the company’s blog.

Although the data were recovered, it was a jolting reminder that using the cloud means giving up control and that the technology is only as good as its stability and reliability.

Similar incidents have happened to businesses. In 2006, the British-Swedish gaming services company GameSwitch lost access to its software and data following a police raid on a different company that happened to use the same data center.

“It basically comes down to the degree to which you trust the cloud-provider,” said Giles Hogben, a communication security expert for ENISA, the EU’s Internet security agency.

Determining whether to trust can be tricky for customers because providers are wary of disclosing their exact security infrastructure, arguing that to do so would make them more vulnerable to cyber-attack, Hogben said.

Customers also do not have much bargaining room with cloud providers, according to a study conducted at Queen Mary, University of London. As with electrical companies or other utilities, it’s “take it or leave it.” EU regulators have taken note of the potential issues.

“We can’t simply assume that voluntary approaches like codes of conduct will do the job,” Kroes said in a speech last month. “Sometimes you need the sort of real teeth only public authorities have.”

However the regulation shakes out, big computing companies like Microsoft say cloud computing will be the next big thing for Europe and the rest of the world.

“It really is the future. All of our products will run on the cloud,” Microsoft associate general counsel, Ron Zink, told Reuters this month.

Zink said 70 percent of Microsoft’s research and development funds were already devoted to cloud computing, with the figure expected to rise to 90 percent soon.

Kroes is also pushing for more of Europe’s public sector to switch to cloud computing, following the United States, which is planning to close 800 of its 2,100 data centers, almost 40 percent, by 2015 as part of a new “cloud-first” policy.

“I want to make Europe not only ‘cloud-friendly’ but ‘cloud-active’,” Kroes said.

The aim and the ambition are there, but negotiating the legal and privacy maze may take time.

15 April 2011

28 March 2011

Cross-Platform Mobile App Development

Retrospection: Cross-Platform Mobile Development at EclipseCon - Heiko Behrens (Blog)

I met Heiko recently in Kiel, Germany, where we were both presenting our own visions of cross-platform mobile app development to a local chapter of the ACM. I was very impressed with his breadth of knowledge and his analysis of the field.

This blog post covers his most recent session at EclipseCon this month.

Now, in my view, the hybrid approach of using web technologies for the user interface, and generating local code to integrate with the features of the mobile device (camera, local storage, contacts list, accelerometer, compass, GPS, and so on), as FeedHenry does, is the best medium to long term bet. Web technologies have proved again and again that they can solve cross-platform UI issues, and they must be the safest bet. When developing mobile apps, I'd much rather learn standard JavaScript, CSS, and HTML/HTML5, with a few extra local API calls to get at the local device's features, than learn a limited subset of JavaScript or some newly invented DSL.

2011-03-28 @ 15:42 Heiko Behrens responds: Thank you for the reference, Mícheál.

I fully agree on the long term: as with the desktop, mobile web technology will eventually be able to do real time rendering and tight integration with the host system. At the moment though, neither desktop browsers nor their mobile counterparts can even access a webcam or the built-in camera with pure web technology. It will take some time to catch up with native capabilities. And as we go, a significant number of outdated browsers (such as WP7) ask for compromises.

We'll see what the future holds...

7 March 2011

University of Limerick iPhone app for Diabetes

Interaction Design Centre - Computer Science and Information Systems Department - University of Limerick - Limerick, Ireland

Tag-it-Yourself™ is a journaling platform that supports the personalization of self-monitoring practices in diabetes. TiY is developed at the Interaction Design Centre (www.idc.ul.ie) and is part of a broader research project called FutureCOMM, which is funded by HEA Ireland under the 4th PRTLI program.

The TSSG led the FutureComm project, and UL's IDC were partners. This is a very interesting output of the research programme. Now all I have to do is persuade them to migrate to FeedHenry to allow it to work across multiple devices such as Android, BlackBerry and Nokia WRT as well as iPhone.

10 February 2011

Native Apps versus HTML5 - good TechCrunch article

HTML5 Is An Oncoming Train, But Native App Development Is An Oncoming Rocket Ship

This article argues that at best lip service, and at worst derision, is being paid to web apps and HTML5 apps, despite an underlying belief that HTML5 is the way of the future. The debate is summarised in terms of the major platforms: Apple (fully native for iPhone and iPad), Google (half and half for Android), Facebook (fully HTML for Facebook).

FeedHenry's offering is effectively a halfway house between the two, as the FH client API wraps the local device functionality, and allows the resulting app to be delivered via app stores as a real app, despite being built with web technologies rather than native development languages.

18 January 2011

W3C Unveils HTML5 Logo

The W3C have unveiled a HTML5 Logo: W3C News Archive: 2011 W3C

W3C unveiled today an HTML5 logo, a striking visual identity for the open web platform. W3C encourages early adopters to use HTML5 and to provide feedback to the W3C HTML Working Group as part of the standardization process. Now there is a logo for those who have taken up parts of HTML5 into their sites, and for anyone who wishes to tell the world they are using or referring to HTML5, CSS, SVG, WOFF, and other technologies used to build modern Web applications. The logo home page includes a badge builder (which generates code for displaying the logo), a gallery of sites using the logo, links for buying an HTML5 T-shirt, instructions for getting free stickers, and more. The logo is available under "Creative Commons 3.0 By" so it can be adapted by designers to meet their needs. See also the HTML5 logo FAQ and learn more about HTML5.

The logo itself is not the main thing; what matters is the richness of HTML5's features, which help make media (audio and video in particular) easier to access in a standardised way on the web.

HTML5 also promises to help level out the differences between the various mobile devices, providing a common web-based layer of functionality that can be targeted by all mobile apps. Currently companies like FeedHenry (where I am CTO) offer a client-side API abstraction that helps cross-platform mobile app development and deployment, utilising web technologies. HTML5 will hopefully make more of these differences irrelevant over time, making it even easier to develop cross-platform mobile apps that work across a wide range of devices, using standardised web technologies (approved by the W3C).

The TSSG (where I am Executive Director Research) are members of the W3C and support these standardisation activities.

UPDATE 2011-02-21 Ian Jacobs of the W3C has blogged on the interesting discussions the unofficial release of the logo to the public has generated, see Ian Jacobs' Blog Entry.

29 December 2010

A good statement of challenges for cloud computing in 2011

Research Report: 2011 Cloud Computing Predictions For Vendors And Solution Providers

The authors present the challenges that cloud computing offers to traditional vendors, outlining the key comparisons between traditional and cloud development. It doesn't strike me as particularly new or insightful, but it is clear and accurate, so my guess is that this makes it worth reading - one usually underestimates how much effort it takes to articulate the zeitgeist clearly.

11 June 2010

TSSG wins first place in Ericsson Mobile Application Awards

I am very happy to announce that a team of programmers from the TSSG have won first place in the Ericsson Mobile Application Awards. The global student competition generated over 700 registered users, 120 registered teams from 28 countries worldwide and the involvement of 1000 end-users globally. The jury selected the winners yesterday at the Nordic Mobile Developer Summit in Stockholm.

Pictured above is Robert Mullins and the two part-time students Kieran Ryan and Mark Williamson who are both members of the TSSG and students on the TSSG-sponsored MSc Communications Software in Waterford Institute of Technology.

The winning entry has an associated YouTube video and was based on the development of a "Caller Profiler application".

This success demonstrates the capability within the TSSG to translate from a high level research interest in telecommunications networks and services, through to real software development that can have an impact on industry, with a link back to WIT's teaching curriculum, in particular with specialised targeted MSc (taught) course offerings designed to enable professional development of specialist software skills.

Robert Mullins led the TSSG's engagement in the Enterprise Ireland funded ILRP IMS-ARCS project that built enablers for next generation IMS services. The development work for the competition was enabled by Enterprise Ireland funding linked to one of the IMS-ARCS industrial members, Vennetics, as partner.

We are grateful to Ericsson for the opportunity to take part in this competition. Ericsson, both in Ireland and in Sweden, work closely with the TSSG in supporting our Next Generation Network TSSG Centre (funded by the TSSG, Enterprise Ireland, and Science Foundation Ireland), and have worked with us on many leading edge research projects.

Well done to the winning team!

UPDATE 2010-06-14 - Video Interview

A video interview with the three winning teams; the TSSG team, as overall winners, are third in the sequence, starting at timestamp 2:20:

UPDATE 2010-06-17 - TSSG Press Release

The official press release on the Applications Award win was released today: TSSG Press Release

8 January 2010

Snow Business is Web Business

Netcraft have posted an analysis showing that the UK National Rail website is suffering under the increased traffic load as worried commuters and travellers check the availability of their routes: National Rail website affected by snow - Netcraft.

26 August 2009

RSS vs Social Media - is RSS losing out?

In an interesting post Patricio Robles discusses why RSS usage is dropping off in the USA and wonders about the equivalent rise in the use of specific social media platforms such as Facebook and Twitter: Is RSS dead? | Blog | Econsultancy.

He concludes that RSS never was mainstream, and that it still serves a very useful function that will not go away any time soon.

I'd agree, adding that we are actually talking about the different flavours of RSS and Atom here. Personally I use RSS/Atom every day, and have started to use Twitter quite a bit. I use tools - like ping.fm - (often enabled with Jabber/IM and RSS) to update Facebook rather than using Facebook directly. Similarly with Yammer, MySpace, LinkedIn, Plaxo Pulse, and Flickr. Life's too short to be logging into all of these individually to update status, or to micro-blog. Also, I still value the more thoughtful composition required for a full (even if short) blog entry, compared to a short 140 character tweet. Thus I view the social media platforms as potential hooks to engage people, rather than as my real focus.

3 August 2009

Tynt tracks web copy and paste

A new product called Tracer from a Canadian start-up company Tynt allows web site owners to track how their content is being copied and pasted. Measuring reader engagement by how often they copy and paste - Nieman Journalism Lab

Tynt homepage.

5 July 2009

X/HTML5 versus XHTML2

In this post last April (that I've only just found), Dave Shea explains why he's moving back to HTML 4.01 strict from XHTML: mezzoblue - Switched.

This is newsworthy, as the W3C have just announced the effective merger of the XHTML2 and HTML5 efforts, as the former's charter expires at the end of this year. It's not that XHTML will go away, but the XHTML2 efforts will be de-emphasised, and having an XHTML compatible version for HTML5 will become the priority.

From W3C News Archive 2009-07-02

2009-07-02: Today the Director announces that when the XHTML 2 Working Group charter expires as scheduled at the end of 2009, the charter will not be renewed. By doing so, and by increasing resources in the HTML Working Group, W3C hopes to accelerate the progress of HTML 5 and clarify W3C's position regarding the future of HTML. A FAQ answers questions about the future of deliverables of the XHTML 2 Working Group, and the status of various discussions related to HTML. Learn more about the HTML Activity.

See also:

- Zeldman: XHTML DOA WTF (with extensive comments and links)

- X/HTML 5 versus XHTML 2

13 March 2009

QZHTTP

February 2009 Web Server Survey - Netcraft

It is interesting to note the massive impact, on statistics about on-line websites held by Netcraft.com, that one large Chinese hosting site, the Qzone blogging service, has had.

In the February 2009 survey we received responses from 215,675,903 sites. This reflects a phenomenal monthly gain of more than 30 million sites, bringing the total up by more than 16%. The majority of this month's growth is down to the appearance of 20 million Chinese sites served by QZHTTP. This web server is used by QQ to serve millions of Qzone sites beneath the qq.com domain.

QQ is already well known for providing the most widely used instant messenger client in China, but this month's inclusion of the Qzone blogging service instantly makes the company the largest blog site provider in the survey, surpassing the likes of Windows Live Spaces, Blogger and MySpace.

The web server they use, their own customized software called QZHTTP, is now the 3rd most popular web server in the world, after Apache and MS IIS, with just shy of a 10% share of the server market; that's real impact! I guess this is just the beginning of this type of phenomenon as the Chinese start to impact on many such on-line statistics.
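As a quick sanity check on the quoted numbers, here is a minimal Python sketch. It assumes the monthly gain was exactly 30 million sites (Netcraft only say "more than 30 million"), so the result is a lower bound; the function name is my own, chosen for illustration.

```python
def percent_increase(new_total, gain):
    """Percentage growth implied by a gain that produced new_total."""
    previous_total = new_total - gain
    return 100.0 * gain / previous_total


# Figures from the Netcraft quote; the 30 million gain is an assumed
# round number, because the survey only says "more than 30 million".
FEB_2009_TOTAL = 215_675_903
ASSUMED_GAIN = 30_000_000

growth = percent_increase(FEB_2009_TOTAL, ASSUMED_GAIN)
print(f"Monthly growth of at least {growth:.1f}%")
```

Under that assumption the growth comes out a little above 16%, which is consistent with the "more than 16%" in the survey.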

23 October 2008

W3C TPAC 2008, Nice

I am at the Advisory Committee meeting of the W3C in Mandelieu, near Nice in France. As usual it is a great buzz with loads of people trying to progress web standards and related standards. The rules of the meeting are such that I cannot blog directly about the contents of the meeting itself - fair enough!

However, the AC (Advisory Committee) meeting is co-located with a TP (Technical Plenary) meeting, many aspects of the latter are more public. Starting at the public W3C TPAC 2008 web page, or the news posting on the W3C front page, will lead to the stuff that is public. Some staff members are also blogging here.

28 July 2008

Google Estimate Over 1 Trillion Web Pages

Thanks to CircleID: Google Says Its Counting Over 1 Trillion Unique Pages on the Web?

"We've known it for a long time: the web is big. The first Google index in 1998 already had 26 million pages, and by 2000 the Google index reached the one billion mark. Over the last eight years, we've seen a lot of big numbers about how much content is really out there. Recently, even our search engineers stopped in awe about just how big the web is these days—when our systems that process links on the web to find new content hit a milestone: 1 trillion (as in 1,000,000,000,000) unique URLs on the web at once!"

It has been noted however that Google does not index all 1 Trillion web pages (see Michael Arrington)

27 November 2007

Tim Bray on Communication

Tim Bray posts On Communication, a good read. I would add printing to the list of key communications technologies, dating from maybe 1430, so making it 577 in Tim's table.

22 November 2007

Tim on the Net, the Web and the Graph of social inter-relations

When Tim blogs we listen, as he has a great way of simplifying complex arguments down to easily understandable metaphors, that just might change the world, again..... Giant Global Graph | Decentralized Information Group (DIG) Breadcrumbs

Semantic Web as a Shadow Web?

Ian Davis posted this useful comment on W3C semantic web activity and the value of keeping activity in the mainstream with many eyes to give feedback and updates on metadata Internet Alchemy: Is the Semantic Web Destined to be a Shadow?

7 November 2007

Håkon Wium Lie: How web fonts can change the face of the web

In an interesting lightning talk at W3C TP this afternoon Håkon Wium Lie described "How web fonts can change the face of the web." He showed that there are very few fonts one can reliably assume are on a client machine. He then showed how CSS 2.0 can reference on-line server-side fonts that can be dynamically downloaded when a web page is viewed.

W3C TPAC Boston Nov 2007

I'm here in Cambridge MA, looking across at the Boston skyline from the other side of the Charles River at the World Wide Web Consortium's (W3C's) TPAC (Technical Plenary/Advisory Committee) joint meeting.

It's a stimulating environment with lots of working groups reporting on their activities, and with parallel meetings before and afterwards to progress these working groups.

As usual I'm impressed by the quality of the on-line collaboration tools allowing people around the world to take part even if they aren't here physically.

Web technologies are effectively a universal layer for services, allowing lighter-weight and heavier-weight approaches to service/application development for ubiquitous access from many types of devices with network connectivity.

2 October 2007

Ubiquitous Web Applications

I am the TSSG's Advisory Committee member for the World Wide Web Consortium (W3C). At our last meeting in Banff, Canada (May 2007) I had the pleasure of meeting, among others, Dave Raggett. He chairs the Ubiquitous Web Applications Activity in the W3C.

On the UWA blog, I noticed that Dave had delivered the opening talk on "The Web of Things" at the UWE Web Developer's Conference in Bristol, UK on 26 September 2007.

Abstract:

A look at the origins of the Web, how it has evolved, and the challenges in extending it to the Web of things as the number and variety of networked devices explodes. Changing the way we conceive of the Web. Why today's hacks will give way to more structured approaches to developing applications that allow developers to focus on what the application should do rather than the details of exactly how.

I am sorry to have missed this talk, but I could read the slides which take about 10-15 minutes to read through: Dave Raggett Slides - Web of Things

Personally, I think the arguments for declarative development (e.g. HTML, XML, ...) over procedural language development (e.g. Java, JavaScript, AJAX, ...) are very strong and will win out in the medium to long term for mobile web development. This slideset explains why. Declarative standards for the web expanding to cover more areas, particularly to enable flexibility of mobile devices as limited as a remote control, are one potential future with many interesting options. It's all about making the infrastructure simple, and lowering the barriers to entry for programming, just as the web has already done for desktop applications (introducing so called "web time" and slashing development costs for distributed systems).

Interestingly I could give almost the same talk as Dave did, with a different focus on how IPv6 can help solve the problems at a lower layer. It'll take both to really achieve both the "Web of things" and the "Internet of things".

11 June 2007

Privacy International: Google Scores Badly

There has been quite a buzz in the blogosphere for the past few weeks over the report on the privacy issues of Internet services produced by Privacy International entitled "A Race to the Bottom - Privacy Ranking of Internet Service Companies".

A good summary is provided in this article: Privacy International pokes a stick in Google’s eye by ZDNet's Dan Farber -- Privacy International has poked Google in the eye with the stick. In an interim report on the privacy ranking of the major Internet services, Google was the only company found among those surveyed to receive a failing grade, which Privacy International described as conducting comprehensive consumer surveillance and having entrenched hostility to privacy. [...]

To quote part of the report that addresses Google:

- Google account holders that regularly use even a few of Google's services must accept that the company retains a large quantity of information about that user, often for an unstated or indefinite length of time, without clear limitation on subsequent use or disclosure, and without an opportunity to delete or withdraw personal data even if the user wishes to terminate the service.

- Google maintains records of all search strings and the associated IP-addresses and time stamps for at least 18 to 24 months and does not provide users with an expungement option. While it is true that many US based companies have not yet established a time frame for retention, there is a prevailing view amongst privacy experts that 18 to 24 months is unacceptable, and possibly unlawful in many parts of the world.

- Google has access to additional personal information, including hobbies, employment, address, and phone number, contained within user profiles in Orkut. Google often maintains these records even after a user has deleted his profile or removed information from Orkut.

- Google collects all search results entered through Google Toolbar and identifies all Google Toolbar users with a unique cookie that allows Google to track the users' web movement. Google does not indicate how long the information collected through Google Toolbar is retained, nor does it offer users a data expungement option in connection with the service.

- Google fails to follow generally accepted privacy practices such as the OECD Privacy Guidelines and elements of EU data protection law. As detailed in the EPIC complaint, Google also fails to adopt additional privacy provisions with respect to specific Google services.

- Google logs search queries in a manner that makes them personally identifiable but fails to provide users with the ability to edit or otherwise expunge records of their previous searches.

- Google fails to give users access to log information generated through their interaction with Google Maps, Google Video, Google Talk, Google Reader, Blogger and other services.

30 May 2007

Zinadoo gets praised in the blogosphere

It is good to see the TSSG campus company Nubiq, who have a mobile web site generator called Zinadoo, getting some good coverage in the blogosphere: Mobile Web 1.0 again and again and again. It really is very easy to set up a mobile website using Zinadoo.

21 May 2007

Web 2.0 AJAX portal start pages

In the TSSG we have been tracking a number of technologies that converge around the idea of the web browser as a flexible docking station for various applications. I am borrowing from a lot that my colleague Eamonn de Leastar has investigated for this posting. The source of these innovations stems from the desire to create a "My X", such as "My Netscape" or the Google customised home page.

You could say this trend for personalised portals started with the Netscape portal idea of the late 1990s that led to the original RSS 0.90 (RDF Site Summary) in 1999. See this History of RSS if you're interested in that journey. There are things before RSS 0.90, but they weren't called RSS (most importantly Dave Winer's Scripting News format). Since then the alternative Atom format has been developed and standardised in the IETF. This history is also linked to the early W3C semantic web standard RDF (Resource Description Framework), and some RSS versions are subsets of RDF.

So the basic idea was that these feed formats could be used to allow syndication and aggregation of content across many different types of content sources such as newspapers and later blogs, but including weather and other sorts of information flows.

The bigger picture with portals is to allow functionality to be bundled into widgets (small sets of functionality) that can allow a host platform to grow through the use of 3rd party information sources (mini-programs). So the feed is the basic low-level entry point here, a simple flow of textual data marked up with XML in RSS or Atom into headline, content and link to original source. The more complex the widgets become, the more the requirement is for a program, rather than just an XML parser, to allow the widget to function.
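To make the "just an XML parser" case concrete, here is a minimal Python sketch that pulls the headline, content and link out of each item in an RSS 2.0 document using only the standard library. The sample feed and the `parse_items` helper are invented for illustration; a real widget would also need to fetch the feed and cope with Atom and the other RSS flavours.

```python
import xml.etree.ElementTree as ET

# Hypothetical RSS 2.0 document, made up for this example.
SAMPLE_RSS = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example Feed</title>
    <link>http://example.org/</link>
    <description>A made-up feed</description>
    <item>
      <title>First headline</title>
      <link>http://example.org/posts/1</link>
      <description>Summary of the first post.</description>
    </item>
    <item>
      <title>Second headline</title>
      <link>http://example.org/posts/2</link>
      <description>Summary of the second post.</description>
    </item>
  </channel>
</rss>"""


def parse_items(rss_text):
    """Return one dict per <item> with its headline, content and link."""
    root = ET.fromstring(rss_text)
    items = []
    for item in root.iter("item"):
        items.append({
            "headline": item.findtext("title", default=""),
            "content": item.findtext("description", default=""),
            "link": item.findtext("link", default=""),
        })
    return items


for entry in parse_items(SAMPLE_RSS):
    print(entry["headline"], "->", entry["link"])
```

Anything beyond this, such as a calendar or an IM client, needs real program logic on top of the parsed data, which is where the widget frameworks come in.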

The other concept that has become very important recently has been client-side scripting sometimes called AJAX (Asynchronous JavaScript and XML), though it doesn't necessarily require JavaScript or even XML to be branded AJAX. Basically it's about using clever client-side web programming techniques to improve the end-user experience, often by pre-fetching server-side data before the user explicitly requests it. Very clever AJAX solutions are emerging that can handle disconnection from the network for periods of time.

One alternative framework to JavaScript is that of Adobe Flash, most commonly associated with multimedia content, but now being used as a light deployment platform (to those with browsers with a Flash plug-in). The latest innovation here is Adobe's Apollo.

The most recent clutch of portal sites are showing some startling effects. Of particular interest were these two:

The former is very slick, the latter is intriguing - have a look at this calendar widget.

It looks like a standard calendar widget. However, look at the four buttons along the top: one of them is "Copy to Desktop". That is, if you have installed the Adobe Apollo engine on your PC (a Flash host of some kind) then there is no distinction between these widgets appearing on your desktop or in your browser.

There is a somewhat similar effect in the latest meebo. The IM (Instant Messaging) client emulation has a button which apparently permits the IM window to escape from the browser and live on decoupled. It is in fact another browser instance - but so customised that it looks more or less like Exodus (the open IM Jabber client). No downloads required of course.

Finally, AB5k (Widgets for the World) is the first Java based widgets framework taking advantage of the major (perhaps Eclipse inspired) renovation and improvement of Swing these past 2-3 years. This is currently in pre-alpha but it might have potential once it gets going.

All of these seem a good bit more sophisticated than Google, Yahoo! or netvibes. Whether they are just toys or not remains to be seen...

16 May 2007

Netcraft Tracks over 600,000 SSL websites

Netcraft's survey of SSL sites has now been running for over ten years. The first survey, in November 1996, found just 3,283 sites; since then, the number of SSL sites has had an average compound growth of 65% per annum.

The survey is a good guide to the growth of online trading and services. The survey counts sites by collecting SSL certificates; each distinct, valid SSL certificate is counted in the results. Each SSL certificate typically represents one company's details, and each certificate must be approved by a certificate authority, so the data is typically more consistent and less volatile than other attributes of the Internet's infrastructure.

Netcraft: Internet Passes 600,000 SSL Sites.

This is far fewer than the total number of websites: the current Netcraft web server survey, as I access it now in May 2007, reads 118,023,363 sites.
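As a quick sanity check on the growth figure quoted above, here is a small Python sketch of the compound growth arithmetic. The elapsed time of 10.5 years (November 1996 to about May 2007) and the rounded figure of 600,000 are my own assumptions, since the exact count and survey date are not given in the quote.

```python
def compound_annual_growth(start, end, years):
    """Annual growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


first_survey = 3283        # SSL sites in November 1996, from the Netcraft quote
latest_survey = 600_000    # rounded milestone; the exact count is not quoted
years_elapsed = 10.5       # assumption: November 1996 to roughly May 2007
all_sites = 118_023_363    # Netcraft web server survey figure cited above

rate = compound_annual_growth(first_survey, latest_survey, years_elapsed)
ssl_share = latest_survey / all_sites

print(f"Compound annual growth: {rate:.1%}")
print(f"SSL sites as a share of all sites: {ssl_share:.2%}")
```

Under those assumptions the rate comes out in the mid-sixties percent, in line with Netcraft's quoted 65%, and SSL sites are only about half a percent of all sites counted.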

7 May 2007

W3C Advisory Committee (Banff, Alberta, Canada)

I have to admit I am impressed by the setting for the W3C Advisory Committee (AC) meeting this week in the Fairmont Hotel, Banff National Park, Alberta, Canada.

We're just gathering now in the 8am-9am coffee/breakfast/registration slot and there's a good buzz about the place. Having seen the range of the discussions on the agenda I'm looking forward to a productive meeting.

Phase 1 of my involvement in web technologies began when I first heard about the web at the NSC92 conference (Network Services Conference) in November 1992 in Pisa, Italy, and went back, very enthused, to University College Galway (UCG), now called NUI Galway, where I worked in computer services. Within a year I had my own webserver and I was the webmaster for UCG's first website. I was very happy when the front page image from the website, a picture of the old quadrangle in UCG, was featured in the Irish Times in an article about the emerging web with the title "The West's Awake" (a reference to a famous Irish rebel song); I think it was around 1994. At the time we would email some guys in UCD in Dublin running a server called Slarti (a reference to the Hitch-Hiker's Guide to the Galaxy character), where a list and a map of active Irish websites was maintained. My personal server and the UCG server are still listed there, as are the servers of other UCG web-heads, many still active, including Joe Desbonet and John Breslin. I ended up getting really into Perl and CGI programming, and published some books on this, as well as being a webmaster in the first iteration of the web.

Phase 2 of my engagement in web technologies began when I moved to Waterford Institute of Technology back in 1996, and got involved in some EU funded projects linked to the Telecommunications Software & Systems Group (TSSG) there. I became really enthused by the whole content aggregation technology suite, with the early versions of RSS from Netscape in the late 1990s, and later with weblogging/blogging, and the first version of this blog (Greymatter then MovableType).

The third phase of my web technology engagement has been via the telecommunications management work in the TSSG, based on the use of emerging semantic modelling techniques, in particular OWL-based solutions, and on applying these to the telecommunications network and service management space in the TeleManagement Forum (TM Forum) and in the new Autonomic Communications Forum (ACF) linked to our research programme on Autonomic Management of Communications Networks and Services.

So coming to the W3C for the first time is a bit like coming home for me, even though the TSSG only joined the W3C very recently, and this is my first AC meeting.

The Advisory Committee meeting is co-located with the WWW2007 conference, and I'll be staying on for two days of that before returning to Ireland.

31 March 2007

26 March 2007

Karen Spärck Jones - ACM/AAAI Newell Award 2006

I have just heard that my MPhil mini-thesis supervisor, Professor Karen Spärck Jones, of the Computer Laboratory in the University of Cambridge (UK), has been awarded the joint ACM and AAAI Newell Award for her work on natural language processing (ACM: Press Release, March 22, 2007).

Congratulations, I am a proud alumnus of the taught masters course she co-founded with the late Professor Frank Fallside, an innovative linkage of computer science and engineering, "Computer Speech and Natural Language Processing", where we did Hidden Markov Models and Neural Networks side-by-side with Prolog and LISP in the late-1980s. I believe that where I now work in the TSSG in Waterford Institute of Technology also captures the creative energy of working where disciplines intermingle, here with telecommunications engineering and Internet technologies.

The notice also mentions that Karen is being given two further awards:

The Athena Lecturer Award, given by the ACM Committee on Women in Computing (ACM-W) recognizes women researchers who have made fundamental contributions to Computer Science [...and...] the Lovelace Medal, presented by the British Computer Society to those who have made significant contributions to advancing and understanding Information Systems.

Well done Karen, you were an inspiration to me and many other students.

UPDATE: 2007-04-26 Karen Spärck Jones, IR Pioneer, Winner of Two ACM Awards: Karen Spärck Jones, recently named as the recipient of ACM/AAAI's Allen Newell Award and ACM-W's Athena Lecturer Award, passed away on April 4. As we say in Irish "Ní bheidh a leithid arís ann" - "We will not see her likes again".

Mark Pilgrim and W3C Standards

Mark Pilgrim has been hired by Google, in his own words (Mon 19th March 2007):

There are two basic visions of the future of the web, and one of them is wrong. I'm going to work on the right one for a while. At Google. Starting today.

In terms of clarifying this here is an older posting of his on W3C standards: W3C and the Overton window [dive into mark].

23 March 2007

Watching the web grow up (Mar 8th 2007; Economist)

This recent Economist article gives a good overview of what's exciting about the mobile web and the semantic web: Watching the web grow up. It is based on discussions with Tim Berners-Lee.

23 February 2007

SatNav causes chaos

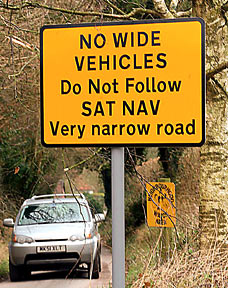

An article from The Mail On Sunday quoted a resident of Exton (a small town in England), Brian Thorpe-Tracey as saying:

About two years ago we noticed a real increase in drivers using the lane. Vehicles are getting stuck and having to reverse back up, damaging the wall and fence. There's even a piece of metal embedded 12ft up in a tree which looks like it's come off a lorry. When I've asked drivers why they are using the lane they say they are just following satnav.

Thanks to Brady Forrest of O'Reilly Radar for the link. This highlights one of the unforeseen problems with new technologies. Brady suggests in his post that allowing user-generated updates to be integrated into the data used by satnav systems could alleviate the problem.

21 February 2007

Connective Knowledge

I came across this interesting article the other day: An Introduction to Connective Knowledge ~ Stephen's Web ~ by Stephen Downes.

It argues that the web has allowed a new way of creating a shared knowledge based on connectivity, and it places this argument within the philosophical debate around meaning.

All very relevant to the semantic web, and an interesting forthright contribution.

How I found the article was interesting in itself. I noticed that John Breslin of DERI was publishing his slide shows on a new slide show sharing service. I browsed the other slide shows in this service and found one by Stephen Downes. I liked it, and I then searched for more material by him.... That's the kind of path we can follow these days to locate interesting materials.

22 November 2006

S Stands for Simple (SOAP)

An excellent history of SOAP and web services: Pete Lacey's Weblog :: The S stands for Simple - laugh out loud.

15 November 2006

The Internet Sucks

For a different view from the norm, try a dose of this light reading, a very informed critique of the mis-placed idealism associated with much Internet and web promotion: Macleans.ca | Top Stories | Life | Pornography, gambling, lies, theft and terrorism: The Internet sucks. Food for thought indeed.

13 November 2006

Semantic Web and Web 2.0

Thanks to Elyes Lehtihet for drawing my attention to this slideset published on-line: SCAI-2006-keynote.pdf (application/pdf Object). In it Ora Lassila of Nokia (as a keynote talk in SCAI 2006, the 9th Scandinavian AI conference, held at the Helsinki University of Technology, Finland, on October 25-27, 2006) describes the potential cross-over areas between these two visions, coming from the perspective of an Artificial Intelligence true believer.

9 November 2006

Top 100 Irish Blogs

Well, I briefly made it into the top 100 blogs by inbound links (Justin Mason: Happy Software Prole » Technorati-ranked Irish Blogs Top 100), but now I'm a goner.... So it goes.

22 September 2006

XML Schemas - How Are They Being Used?

Paul Kiel posts on O'Reilly's XML.com, in Profiling XML Schema, an analysis of which features of XML schemas are actually used in practice, with advice on the schema features to avoid. Very interesting.... Not surprisingly, the vast majority of schemas analysed stuck to simplicity. To quote from Paul's conclusions: "The clearest message is one of simplicity. The most commonly used constructs involve merely creating reusable types, assembling them into sequences of elements, and augmenting them with enumerations. Many of the more complex features went unused."
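To give a feel for what this kind of profiling involves, here is a rough Python sketch that counts which XML Schema constructs appear in a schema. This is my own illustration, not Paul's method or tooling: the sample schema and the `profile_schema` function are invented, and the schema deliberately uses only the "simple" constructs his conclusions mention (a reusable type, a sequence of elements, an enumeration).

```python
import xml.etree.ElementTree as ET
from collections import Counter

XSD_NS = "http://www.w3.org/2001/XMLSchema"

# An invented schema using only reusable types, a sequence and an enumeration.
SAMPLE_XSD = """<xs:schema xmlns:xs="http://www.w3.org/2001/XMLSchema">
  <xs:simpleType name="CurrencyCode">
    <xs:restriction base="xs:string">
      <xs:enumeration value="EUR"/>
      <xs:enumeration value="USD"/>
    </xs:restriction>
  </xs:simpleType>
  <xs:complexType name="Amount">
    <xs:sequence>
      <xs:element name="value" type="xs:decimal"/>
      <xs:element name="currency" type="CurrencyCode"/>
    </xs:sequence>
  </xs:complexType>
  <xs:element name="price" type="Amount"/>
</xs:schema>"""


def profile_schema(xsd_text):
    """Count how often each XML Schema construct occurs in the schema text."""
    counts = Counter()
    for node in ET.fromstring(xsd_text).iter():
        # ElementTree spells qualified tags as "{namespace}localname".
        namespace, _, local = node.tag[1:].partition("}")
        if namespace == XSD_NS:
            counts[local] += 1
    return counts


for construct, count in profile_schema(SAMPLE_XSD).most_common():
    print(f"{construct:12} {count}")
```

Run over a large collection of real schemas, a tally like this would show which features people actually rely on and which, such as `choice` or `redefine`, rarely turn up.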

1 September 2006

Irish Times coverage of Nubiq's Zinadoo launch

A 3CS and TSSG spinout company, Nubiq, is today launching its Zinadoo product for mobile content creation management. This article in today's Irish Times summarises the key points: Irish Times Article - Firm makes mobile websites easy

This is the press release from 3CS:

The Centre for Converged Services (3CS) based at the Waterford Institute of Technology (WIT) will see another spin off company come to fruition in September: Nubiq Ltd. Funded by Enterprise Ireland, the new campus company, which specialises in community mobile solutions, will launch its first product, zinadoo.com, around that time. Zinadoo provides a service that enables end-users to create their own mobile website from their computer. The service provides a full solution to end-users to enable them to take existing website content and create, deploy and manage new mobile services and mobile websites. Users don't have to write software, develop and manage connections to operators' networks and gateways, or host, manage and monitor the service.

In addition to mobile website creation, an end user can create their own text services to promote their site, invite people to see it and use it and to build community services. The zinadoo services range from group texting, to text voting and automated text response services, which set it apart from its competitors. It is an easy and effective way for people to express themselves through the creation of websites using their mobile phones.

Since February of this year, sporting clubs such as Gaelic football clubs, golf clubs and more recently the Waterford branch of the Youth Information Services Organisation have taken part in the new mobile services trials. These trials aim to bring mobile services to community groups and SMEs looking for an easier way to keep in touch with friends, family and customers.

Helene Haughney, Chief Executive, Nubiq said: "Until now internet users who create content and services on the web, be it social networking, journaling or using sites for photo distribution, have had no way of doing so for mobile apart from simple blog support.

As a solution to this, we developed zinadoo to be used by individuals, social clubs, high growth SMEs, anyone in fact, to build innovative mobile websites and community services. It allows businesses and communities to communicate using the most effective means available today - the mobile phone."

"So far uptake for this service has been high and the reaction to it from our end users has been very positive. They have found it invaluable for sending out notifications regarding, for example; golf competitions, concerts and match fixtures," Ms. Haughney continued.

Barry Downes, Centre Director, 3CS added: "Using Zinadoo is as easy as listing an item on eBay and as a result it will tap into the latent demand of end-users to use the mobile channel to publish, share information and engage in social networks. It grants users, previously denied by technical and organisational hurdles, access to an easy-to-operate solution, value added services, international communities and limitless business opportunities."

The zinadoo product is being trialled under the EU funded eTen market validation project. Built by the project coordinator Waterford Institute of Technology, other partners include: AePONA (UK), Aceno (Ireland), Fraunhofer Institute FOKUS (Germany), OTEPlus (Greece) and Telefonica I&D (Spain).

The Centre for Converged Services (www.3CS.info) main area of research is convergent software services for next generation networks such as IMS (IP Multimedia Subsystem). 3CS is associated with the Telecommunication Software and Systems Group (TSSG) at WIT and also Fraunhofer Institute FOKUS, in Berlin. 3CS has expertise in the areas of IMS services, Internet Information Management and Syndication (RSS/ATOM) systems, architectures and services, mobile multimedia and Web 2.0 services and frameworks. 3CS currently has 12 active research projects, the funding for which has been won through competitive tenders for national and international research funding.

According to Barry Downes "Nubiq is the first of a number of campus companies that 3CS is developing based on its research agenda and its collaborative relationships with industry and the research community. I look forward to Nubiq's success and future technology transfers based on 3CS's research that will benefit the Irish economy".

For further information please contact:

Hélène Haughney

Tel: 051 302974

www.nubiq.com

26 August 2006

Roy Fielding interviewed by Jon Udell

Jon Udell: A conversation with Roy Fielding about HTTP, REST, WebDAV, JSR 170, and Waka

As one of the prime movers in the W3C and the inventor of the term REST (Representational State Transfer) Roy is definitely worth listening to.

23 August 2006

Map of Irish Buying Habits

John Breslin posts adverts.ie Map at Cloudlands, about a map (a Google Maps mash-up) of how people are buying and selling via adverts.ie.

He also includes a table of co-ordinates of Irish counties - useful.....

22 August 2006

Gartner Hype Cycle 2006 (Predictions)

Gartner's 2006 Emerging Technologies Hype Cycle Highlights Key Technology Themes

1. Web 2.0

Web 2.0 represents a broad collection of recent trends in Internet technologies and business models. Particular focus has been given to user-created content, lightweight technology, service-based access and shared revenue models. Technologies rated by Gartner as having transformational, high or moderate impact include:

Social Network Analysis (SNA) is rated as high impact (definition: enables new ways of performing vertical applications that will result in significantly increased revenue or cost savings for an enterprise) and capable of reaching maturity in less than two years. SNA is the use of information and knowledge from many people and their personal networks. It involves collecting massive amounts of data from multiple sources, analyzing the data to identify relationships and mining it for new information. Gartner said that SNA can successfully impact a business by being used to identify target markets, create successful project teams and serendipitously identify unvoiced conclusions.

Ajax is also rated as high impact and capable of reaching maturity in less than two years. Ajax is a collection of techniques that Web developers use to deliver an enhanced, more-responsive user experience in the confines of a modern browser (for example, recent version of Internet Explorer, Firefox, Mozilla, Safari or Opera). A narrow-scope use of Ajax can have a limited impact in terms of making a difficult-to-use Web application somewhat less difficult. However, Gartner said, even this limited impact is worth it, and users will appreciate incremental improvements in the usability of applications. High levels of impact and business value can only be achieved when the development process encompasses innovations in usability and reliance on complementary server-side processing (as is done in Google Maps).

Collective intelligence, rated as transformational (definition: enables new ways of doing business across industries that will result in major shifts in industry dynamics) is expected to reach mainstream adoption in five to ten years. Collective intelligence is an approach to producing intellectual content (such as code, documents, indexing and decisions) that results from individuals working together with no centralized authority. This is seen as a more cost-efficient way of producing content, metadata, software and certain services.

Mashup is rated as moderate on the Hype Cycle (definition: provides incremental improvements to established processes that will result in increased revenue or cost savings for an enterprise), but is expected to hit mainstream adoption in less than two years. A "mashup" is a lightweight tactical integration of multi-sourced applications or content into a single offering. Because mashups leverage data and services from public Web sites and Web applications, they're lightweight in implementation and built with a minimal amount of code. Their primary business benefit is that they can quickly meet tactical needs with reduced development costs and improved user satisfaction. Gartner warns that because they combine data and logic from multiple sources, they're vulnerable to failures in any one of those sources.

2. Real World Web

Increasingly, real-world objects will not only contain local processing capabilities - due to the falling size and cost of microprocessors - but they will also be able to interact with their surroundings through sensing and networking capabilities. The emergence of this Real World Web will bring the power of the Web, which today is perceived as a "separate" virtual place, to the user's point of need of information or transaction. Technologies rated as having particularly high impact include:

Location-aware technologies should hit maturity in less than two years. Location-aware technology is the use of GPS (global positioning system), assisted GPS (A-GPS), Enhanced Observed Time Difference (EOTD), enhanced GPS (E-GPS), and other technologies in the cellular network and handset to locate a mobile user. Users should evaluate the potential benefits to their business processes of location-enabled products such as personal navigation devices (for example, TomTom or Garmin) or Bluetooth-enabled GPS receivers, as well as WLAN location equipment that may help automate complex processes, such as logistics and maintenance. Whereas the market sees consolidation around a reduced number of high-accuracy technologies, the location service ecosystem will benefit from a number of standardized application interfaces to deploy location services and applications for a wide range of wireless devices.

Location-aware applications will hit mainstream adoption in the next two to five years. An increasing number of organizations have deployed location-aware mobile business applications, mostly based on GPS-enabled devices, to support key business processes and activities, such as field force management, fleet management, logistics and goods transportation. The market is in an early adoption phase, and Europe is slightly ahead of the United States, due to the higher maturity of mobile networks, their availability and standardization.

Sensor Mesh Networks are ad hoc networks formed by dynamic meshes of peer nodes, each of which includes simple networking, computing and sensing capabilities. Some implementations offer low-power operation and multi-year battery life. Technologically aggressive organizations looking for low-cost sensing and robust self-organizing networks with small data transmission volumes should explore sensor networking. The market is still immature and fragmented, and there are few standards, so suppliers will evolve and equipment could become obsolete relatively rapidly. Therefore, this area should be seen as a tactical investment, as mainstream adoption is not expected for more than ten years.

3. Applications Architecture

The software infrastructure that provides the foundation for modern business applications continues to mirror business requirements more directly. The modularity and agility offered by service oriented architecture at the technology level and business process management at the business level will continue to evolve through high impact shifts such as model-driven and event-driven architectures, and corporate semantic Web. Technologies rated as having particularly high impact include:

Event-driven Architecture (EDA) is an architectural style for distributed applications, in which certain discrete functions are packaged into modular, encapsulated, shareable components, some of which are triggered by the arrival of one or more event objects. Event objects may be generated directly by an application, or they may be generated by an adapter or agent that operates non-invasively (for example, by examining message headers and message contents). EDA has an impact on every industry. Although mainstream adoption of all forms of EDA is still five to ten years away, complex-event processing EDA is now being used in financial trading, energy trading, supply chain, fraud detection, homeland security, telecommunications, customer contact center management, logistics and sensor networks, such as those based on RFID.

Model-driven Architecture is a registered trademark of the Object Management Group (OMG). It describes OMG's proposed approach to separating business-level functionality from the technical nuances of its implementation. The premise behind OMG's Model-Driven Architecture and the broader family of model-driven approaches (MDAs) is to enable business-level functionality to be modeled by standards, such as Unified Modeling Language (UML) in OMG's case; allow the models to exist independently of platform-induced constraints and requirements; and then instantiate those models into specific runtime implementations, based on the target platform of choice. MDAs reinforce the focus on business first and technology second. The concepts focus attention on modeling the business: business rules, business roles, business interactions and so on. The instantiation of these business models in specific software applications or components flows from the business model. By reinforcing the business-level focus and coupling MDAs with SOA concepts, you end up with a system that is inherently more flexible and adaptable.

Corporate Semantic Web applies semantic Web technologies, aka semantic markup languages (for example, Resource Description Framework, Web Ontology Language and topic maps), to corporate Web content. Although mainstream adoption is still five to ten years away, many corporate IT areas are starting to engage in semantic Web technologies. Early adopters are in the areas of enterprise information integration, content management, life sciences and government. Corporate Semantic Web will reduce costs and improve the quality of content management, information access, system interoperability, database integration and data quality.

"The emerging technologies hype cycle covers the entire IT spectrum but we aim to highlight technologies that are worth adopting early because of their potentially high business impact," said Jackie Fenn, Gartner Fellow and inventor of the first hype cycle. One of the features highlighted in the 2006 Hype Cycle is the growing consumerisation of IT. "Many of the Web 2.0 phenomenon have already reshaped the Web in the consumer world", said Ms Fenn. "Companies need to establish how to incorporate consumer technologies in a secure and effective manner for employee productivity, and also how to transform them into business value for the enterprise".

The benefit of a particular technology varies significantly across industries, so planners must determine which opportunities relate most closely to their organisational requirements. To make this easier, a new feature in Gartner's 2006 hype cycle is a "Priority matrix" which clarifies a technology's potential impact - from transformational to low - and the number of years it will take before it reaches mainstream adoption. "The pairing of each Hype Cycle with a Priority Matrix will help organisations to better determine the importance and timing of potential investments based on benefit rather than just hype," said Ms Fenn.

2006 Hype Cycle for Emerging Technologies

Note to editors: More information on each of the technologies identified in the emerging technologies hype cycle and on the priority matrix can be obtained from Gartner PR.

Despite the changes in specific technologies over the years, the hype cycle's underlying message remains the same: Don't invest in a technology just because it is being hyped, and don't ignore a technology just because it is not living up to early expectations.

"Be selectively aggressive - identify which technologies could benefit your business, and evaluate them earlier in the Hype Cycle", said Ms. Fenn. "For technologies that will have a lower impact on your business, let others learn the difficult lessons, and adopt the technologies when they are more mature."

About the Gartner 2006 Hype Cycles

The "Hype Cycle for Emerging Technologies, 2006" report is one of 78 hype cycles released by Gartner in 2006. More than 1,900 information technologies and trends across more than 75 industries, technology markets, and topics are evaluated by more than 300 Gartner analysts in the most comprehensive assessment of technology maturity in the IT industry. Gartner's hype cycles assess the maturity, impact and adoption speed of hundreds of technologies across a broad range of technology, application and industry areas. It highlights the progression of an emerging technology from market over enthusiasm through a period of disillusionment to an eventual understanding of the technology's relevance and role in a market or domain. Additional information regarding the hype cycle reports is available on Gartner's Web site at http://www.gartner.com/it/docs/reports/asset_154296_2898.jsp.

Each Hype Cycle Model follows five stages:

1. "Technology Trigger"

The first phase of a Hype Cycle is the "technology trigger" or breakthrough, product launch or other event that generates significant press and interest.

2. "Peak of Inflated Expectations"

In the next phase, a frenzy of publicity typically generates over-enthusiasm and unrealistic expectations. There may be some successful applications of a technology, but there are typically more failures.

3. "Trough of Disillusionment"

Technologies enter the "trough of disillusionment" because they fail to meet expectations and quickly become unfashionable. Consequently, the press usually abandons the topic and the technology.

4. "Slope of Enlightenment"

Although the press may have stopped covering the technology, some businesses continue through the "slope of enlightenment" and experiment to understand the benefits and practical application of the technology.

5. "Plateau of Productivity"

A technology reaches the "plateau of productivity" as the benefits of it become widely demonstrated and accepted. The technology becomes increasingly stable and evolves in second and third generations. The final height of the plateau varies according to whether the technology is broadly applicable or benefits only a niche market.
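The priority matrix mentioned in the press release pairs a technology's benefit rating with its time to mainstream adoption. As a rough sketch of that idea in Python, here are the four Web 2.0 ratings quoted above grouped into such a grid. The data structure, the wording of the time bands and the `build_priority_matrix` name are my own assumptions, not Gartner's format.

```python
from collections import defaultdict

# (technology, benefit rating, time to mainstream adoption), as quoted above.
RATINGS = [
    ("Social Network Analysis", "high", "less than 2 years"),
    ("Ajax", "high", "less than 2 years"),
    ("Collective intelligence", "transformational", "5 to 10 years"),
    ("Mashup", "moderate", "less than 2 years"),
]


def build_priority_matrix(ratings):
    """Group technology names by their (benefit, time horizon) cell."""
    matrix = defaultdict(list)
    for name, benefit, horizon in ratings:
        matrix[(benefit, horizon)].append(name)
    return dict(matrix)


matrix = build_priority_matrix(RATINGS)
for (benefit, horizon), names in sorted(matrix.items()):
    print(f"{benefit:16} | {horizon:17} | {', '.join(names)}")
```

Read this way, the "be selectively aggressive" advice amounts to looking first at the cells that combine a high or transformational benefit with a short horizon.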

19 August 2006

MIDA Marine Irish Digital Atlas

The Marine Irish Digital Atlas - Spatial Ireland

The Marine Irish Digital Atlas (MIDA) has been officially launched. It provides information about geographically referenced data for the island's marine and coastal areas. The Atlas displays vector and raster datasets, associated metadata and relevant multimedia.

30 July 2006

Dave Johnson: Beyond Blogging: Understanding feeds and publishing protocols

Tim Bray's post alerted me to this excellent slideset explaining feeds, giving an excellent clear history of RSS and Atom.

TriXML2006-BeyondBlogging.pdf (application/pdf Object) "Beyond Blogging: Understanding feeds and publishing protocols" (Dave Johnson, Sun Microsystems, 2006).

12 July 2006

Barcodepedia

This site processes images of barcodes you upload: Barcodepedia.com - the online barcode database. Cool. No wonder it's getting Slashdotted.

19 June 2006

Auto-processing Texts for geographical References

This looks like a very interesting concept: O'Reilly Radar > Gutenkarte: Geo annotation of Gutenberg texts. A sample map generated: Thucydides' classic The History of the Peloponnesian Wars. Just think, you could do this with thrillers set in a city and track the chase scenes on a map! Excellent stuff.

8 June 2006

NESSI General Assembly

I am in Brussels at the NESSI General Assembly. This is a new type of process being promoted by the European Commission in advance of the new 7th Framework Programme, the next phase of the EC research funding process (due to formally cover 2007-2013). The idea is to allow key commercial interests to form a "Technology Platform" providing a forum for companies to meet and define a common research agenda that defines roadmap(s) addressing the strategic development of the industrial sector in Europe (primarily the EU 25 member states).

NESSI, Networked European Software Services Initiative, is one of these technology platforms (or ETPs), currently comprising 22 core partners from four types of organisation: industry, SMEs (Small and Medium-sized Enterprises), academia and user group representation.

As of now the overall strategic objectives have been documented in SRA (Strategic Research Agenda) Volume 1: Framing the future of the service oriented economy, and further documents are in progress.

It is certain that the general move of Information Communication Technologies (ICT) towards services is a global technology trend. The ambitious aim of this initiative is to help define this trend in an integrative way that encompasses end users, technologists (academic and industrial) and solutions providers.

6 June 2006

OneThousandPaintings

Sala had sold only 106 paintings in 110 days. Not even one painting per day. Then he sold 315 in two days!

Cheapest one is now USD 160 plus p&p!

26 May 2006

O'Reilly Trademarks "Web 2.0"!

Tom Raftery on O'Reilly trademarks 'Web 2.0' and sets lawyers on IT@Cork!

25 May 2006

Mobile Gaming

Brady on Mobile Gaming O'Reilly Radar > Where 2.0: Pixie Hunt - looks like it is really starting to hot up as a topic. I hope we can get involved from the TSSG in designing and developing some good mobile games with a location-based element.

Bernie at EdTech 2006

Bernie's at EdTech 2006: IrishEyes: Sound education in your pocket

24 May 2006

Sphere could be the next big thing in blog search

Brady on Sphere's Blog Search.

Sphere launched a month ago, but I only just got to really check it out last week when I sat down with Tony Conrad, CEO and founder (Tony was previously involved in Oddpost and is an advisor for Automattic). The technical team is the crew that brought us Waypath.com - an early blog search engine.

Sphere is an impressive blog search engine and one that is sure to rise in traffic. In a very short time it has already reached Feedster's traffic levels and surpassed Pubsub (they have a while to go before reaching Technorati).

As they build their index they are focusing on avoiding splogs and pulling in quality (reminds me of techmeme.com's approach). Their index allows for a search to run over a 4 month period and they have a very useful UI element that allows you to see the post distribution for your query. You can use this tool to focus your search on a custom date range (the default for a search is a week).

17 May 2006

Confluence, Oblix, Oracle Ummmm

I stumbled across this interesting article by Sekhar Sarukkai & David Cohen on Web services and the forgotten OSI Layer Six (from a company called Confluence, posted in September 2003). I quite liked the idea of describing management in terms of the OSI layers, and linking network management at the lower layers with application management at the higher layers. In fact, although we do this in the TSSG, I had rarely seen a big picture statement like this putting things in context.... I went to read the whitepapers from the company, only to discover that they had been acquired by Oblix in 2004, and that Oblix had subsequently been acquired by Oracle in 2005. Pity I didn't spot that article in 2003, I might have been onto something :-) So, now the technology has been integrated into Oracle's identity server (COREid), as described neatly by Pamela Dingle in Oracle COREid Tidbits:

Oracle’s Identity suite is very exciting! The products that are part of this suite (at least the ones that interest me) are:

- Xellerate - acquired from Thor

- COREid - acquired from Oblix

- OWSM (Oracle Web Services Manager) - acquired from Oblix who acquired it through Confluence (iirc)

- Virtual Directory - acquired from OctetString

The good news is that this is a spectacular set of functionality. The bad news is that you will NEVER FIND what you need on their godawful website. So - here is my “frequently wished for but rarely found” list:

....For some wacky reason, the COREid stuff is considered to be logically part of the Oracle Application Server. I know, that makes no sense, since the core service does not run in a container, and it is perfectly possible to run COREid and never have anything to do with the Application Server. Still, for browsing purposes, this information is CRITICAL.

16 May 2006

TrafficGauge

Tim O'Reilly on TrafficGauge rocks. Looks like there is a market for dedicated hardware with GPS/Mapping functionality.

Atom Newsreel

Tim Bray on Atom Newsreel:

I’ve been accumulating things Atomic to write about for a while, so here goes.

Item: You’ll be able to blog from inside Microsoft Word 2007 via the Atom Publishing Protocol.

Item: Sam Ruby has wrangled Planet to the point where it handles Atom 1.0 properly.

Item: Along the way, Sam reported a common bug in Atom 1.0 handling, and his comments show it being fixed all over (Planet, MSN, and Google Reader, but not Bloglines of course); the Keith reference in Sam’s title is to this. [Update: Gordon Weakliem extirpates another common bug from the NewsGator universe.]

Item: The Movable Type Feed Manager is based on James Snell’s proposed Threading Extensions to Atom 1.0; Byrne Reese seems to think that particular extension is hot stuff.

Item: Nature magazine is extending Atom 1.0 for their Open Text Mining Interface.

Item: The Google Data APIs are old news now, but it looks like they’re doing Atom 1.0 and playing by the rules.

Last Item: Over in the Atom Working Group, we’re getting very close to declaring victory and going for IETF last call on the Protocol document.

28 April 2006

Virtuoso goes Open Source

It has recently been announced that Virtuoso has gone open source.

Here is a weblog announcement: Virtuoso is Officially Open Source! (Kingsley Idehen's Weblog).

This is a detailed history of how the product has evolved: oWiki | Main.VOSHistory

I first heard of this announcement via Jon Udell's A conversation with Kingsley Idehen, announcing a podcast interview with Kingsley Idehen, and indeed I'd heard of the product because of Jon's earlier postings dating back to 2002.

The Open Web

An excellent article on walled gardens and the value of open systems for sharing information/data/knowledge/apis/... and so on: Breaking the Web Wide Open! (complete story) :: AO

About the author of this piece:

Marc Canter is an active evangelist and developer of open standards. Early in his career, Marc founded MacroMind, which became Macromedia. These days, he is CEO of Broadband Mechanics, a founding member of the Identity Gang and of ourmedia.org. Broadband Mechanics is currently developing the GoingOn Network (with the AlwaysOn Network), as well as an open platform for social networking called the PeopleAggregator.

Good morning, Waterford

Great picture from Paul Watson. And what was he doing up so early in the morning, you might ask? The answer was catching an early bus to Dublin to attend the Irish Web 2.0 Conference organised by Enterprise Ireland. Given that Paul works on the TSSG project Feed Henry, probably the most Web 2.0-ish project in our portfolio of 30 or so projects, it was very appropriate for him to attend.

Update: this article describes the conference:

Ireland.com piece on Web 2.0

27 April 2006

Apache now leading SSL webserver according to Netcraft

From the monthly Netcraft web server survey Netcraft: Apache Now the Leader in SSL Servers

As the original developers of the SSL protocol, Netscape started out with a lead in the SSL server market. But they were soon overtaken by Microsoft's Internet Information Server, which within a few years held a steady 40-50% of the SSL server market.

Apache has taken much longer to reach the top. Version 1 of Apache did not include SSL support: in the 1990s, US export controls, and the patent on the RSA algorithm in the US, meant that cryptographic support for open source projects had to be developed outside of the US, and were distributed separately. Several independent projects provided SSL support for Apache, including Apache-SSL and mod_ssl; but commercial spin-offs, like Stronghold by c2net (later bought by Red Hat), were more popular at that time.

Now that mod_ssl is included as standard in version 2, Apache has become more popular for hosting secure websites. The total for Apache includes other projects from the ASF including Tomcat, and includes Apache-SSL, but does not include derived products like Stronghold or IBM HTTP Server. c2net/Red Hat includes only Stronghold and Red Hat SWS.

Apache is also gaining from geographical changes. The US, where Microsoft retains a strong lead, used to have over 70% of the Internet's secure websites. Other countries have been catching up, however: countries including Japan and Germany, where Apache is preferred, have faster growth in SSL sites. As ecommerce has caught on in other countries, the US share has been diluted, and is now only 50%.

Netcraft's SSL survey has been running since 1996. It tracks the growing use of secure web servers on the Internet, and the server software, operating systems and certificates that are used. Single user and company subscriptions are available, and custom datasets can be produced on request.

6 April 2006

Jon Udell - a tech writer for web techies

Tim O'Reilly has just published a posting explaining why he, and we all, read Jon Udell's blog postings and excellent articles.

O'Reilly Radar: Nice Recognition for Jon Udell.

I heartily agree with everything Tim says here, and so I thought I'd reproduce it in full with attribution; thanks Tim for using the Creative Commons licence that allows me to do so.

I still have a cherished copy of Practical Internet Groupware, and copies of old BYTE Magazine articles by Udell. He really has charted the rise of Web 1.0 and Web 2.0 in a way no one else has done, in his typically modest fashion. Keep it coming Jon!

Jon Udell writes on his blog: "Folio, the recursively-named magazine for magazine management, has included me in its list of 40 industry influencers, in the Under the Radar category." Here's what they had to say about Jon.

Of course, for active blog readers, Jon is anything but under the radar! Jon's Radio has long been one of the leading technology blogs. But even more striking is just how far ahead of the curve Jon is. His book Practical Internet Groupware, which I published back in 1999, prefigured the whole explosion of interest in what is now called social computing. Jon was also the very first person to articulate (at least for me) the vision of what we're now calling Web 2.0. (He gave a keynote talk on what we now call "the programmable web" at our first Perl Conference in 1997!) Jon was just a bit too early! He's long been one of the people I watch to learn about what comes next.

I wrote a preface to that book (now out of print, although still available on Safari) in which I told the world what I think of Jon Udell. While the bulk of the book is out of date, Jon's vision of the programmable web and his creative approach to using existing applications in new ways is still worth reading today. It seems like an appropriate time to repeat what I said then:

At O'Reilly & Associates, we have a history of being ahead of the curve. In the mid-'80s, we started publishing books about many of the free software programs that had been incorporated into the Unix operating system. The books we wrote and published were an important element in the spread and use of Perl, sendmail, the X Window System, and many of the programs that have now been collected under the banner of Linux.

In 1992, we published The Whole Internet User's Guide and Catalog, the book that first brought the Internet into the public consciousness. In 1993, we launched GNN, the first ever Internet portal, and were the first company to sell advertising on the Web.

In 1997, we convened the meeting of free software developers that led to the widespread adoption of the term Open Source software. All of a sudden, the world realized that some of the most important innovations in the computer industry hadn't come from big companies, but rather from a loose confederation of independent developers sharing their code over the Internet.

In each case, we've managed to expose the discrepancy between what the industry press and pundits were telling us and what the real programmers, administrators, and power users who make up the leading edge of the industry were actually doing. And in each case, once we blew the whistle, the mainstream wasn't far behind.

I like to think that O'Reilly & Associates has functioned as something like the Paul Revere of the Internet revolution.

I tell you these things not to brag, but to make sure you take me seriously when I tell you that I've got another big fish on the line.

Every once in a while a book comes along that makes me wake up and say, "Wow!" Jon Udell's Practical Internet Groupware is such a book.

There are several things that go into making this such a remarkable book.

First, there is the explicit subject: how to build tools for collaborative knowledge management. As we get over the first flush of excitement about the Internet, we want it to work better for us. We're overwhelmed by email, our web searches baffle us by returning tens of thousands of documents and only rarely the ones we want, and our hard disks bulge with documents that we've saved but don't know how to share with other people who might need them.

Jon's book provides practical guidance on how to solve some of these problems by using the overlooked features in modern web browsers that allow us to integrate web pages with the more chaotic flow of conversation that goes on in email and conferencing applications. While much of the book is aimed at developers, virtually anyone who uses the Internet in a business setting can benefit from the perspectives Jon provides in his opening chapters.

How to build effective applications for conferencing and other forms of Internet-enabled collaboration is one of the most important questions developers are wrestling with today. Anyone who wants to build an effective intranet, or to better manage their company's interactions with customers, or to build new kinds of applications that bring people together, will never think about these things in the same way after reading this book.

Second, more than anyone else I know, Jon has thrown off the shackles of the desktop computing paradigm that has shaped our thinking for the better part of the last two decades. He works in a world in which the Net, rather than any particular operating system, is truly the application development platform.

All too often, people wear their technology affiliations on their sleeve (or perhaps on their T-shirts), much as people did with chariot racing in ancient Rome. Whether you use NT or Linux, whether you program in Perl or Java or Visual Basic, these are marks of difference and the basis for suspicion. Jon stands above this fragmented world like a giant. He has only one software religion: what works. He moves freely between Windows and Linux, Netscape and Internet Explorer, Perl, Java, and JavaScript, and ties it all together with the understanding that it is the shared Internet protocols that matter.

Any developer worth his salary in tomorrow's market is going to need a cross-platform toolbox much like the one Jon applies in this book.